The B-Human project has been participating in the RoboCup robot football competitions and their regional offshoots in the Standard Platform League since 2009. In this league, all teams use the humanoid robot NAO from SoftBank Robotics, so every team works with the same hardware. The work of the project therefore focuses primarily on developing and improving the robots' software. This covers a variety of tasks, such as image recognition, localisation and autonomous agents. Building on the software of its predecessors, the B-Human project has won the RoboCup German Open nine times so far. B-Human has also been extremely successful internationally, bringing home a total of seven world championship titles, the most recent in 2019 from Sydney.

Due to the coronavirus pandemic and the accompanying contact restrictions, this year's RoboCup unfortunately had to be cancelled. The German Open in Magdeburg was postponed until September. Since the project day can only take place digitally, we have made a few videos for you instead, which should give you an insight into the project's various areas of activity. If you are interested, you can also watch real football matches that we recorded at past competitions on our channel.

German Open Replacement Event 2022

From 11.04.2022 to 17.04.2022, the B-Human project participated in GORE 2022, which took place at the Hamburg Chamber of Commerce. GORE stands for German Open Replacement Event, as the German Open could not take place as usual in recent years due to the coronavirus pandemic. Besides the RoboCup in July, GORE is definitely one of the highlights of the project. Teams took part not only from all over Germany, but also from Italy, Australia and Ireland; these teams were provided with robots and actively supported by the teams present on site.

After two and a half days of setup, the games began for B-Human on Friday. Although there was a lot of code to improve and bugs to fix during the tournament, the games went well from the beginning and our NAOs won the overall title without losing a game or conceding a single goal!

We are all very proud of our performance and would especially like to thank our coaches Tim Laue and Thomas Röfer as well as the B-Human Alumni, who are so actively involved in the project and make such successes possible! Despite a few absences due to illness among the project participants, there were enough hands to ensure that everything ran smoothly before, between and after the games.

We still have some things to improve before the RoboCup, so that we can hopefully build on this success.

Coordinated passing and positioning

by Jo Lienhoop

A robot in possession of the ball must constantly decide between different actions:

- dribble in one direction,

- pass to a teammate,

- or shoot at the goal.

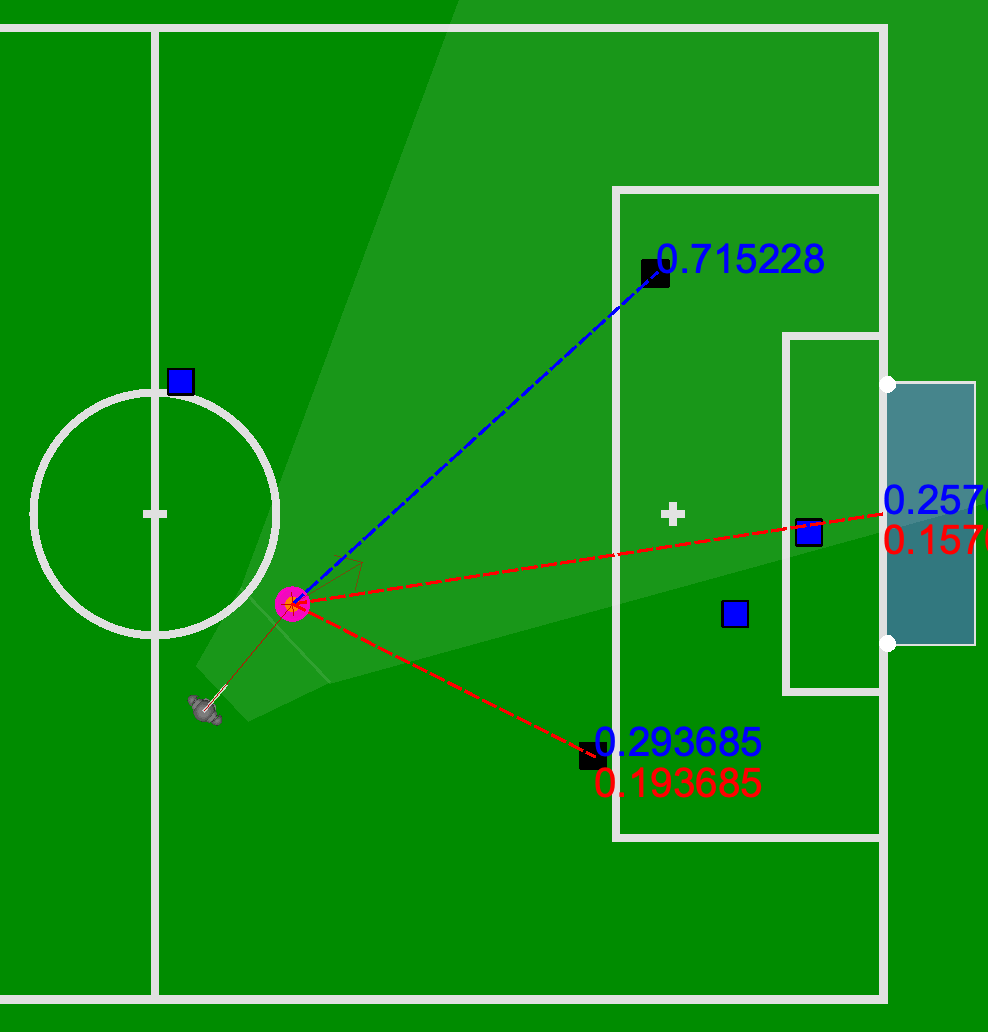

For this purpose, I have constructed evaluation functions to weigh up goal scoring and passing options against each other.

Intuitively, we assess:

- “How likely is it that the pass will arrive?”,

- “How likely is it that the goal shot will be successful?”.

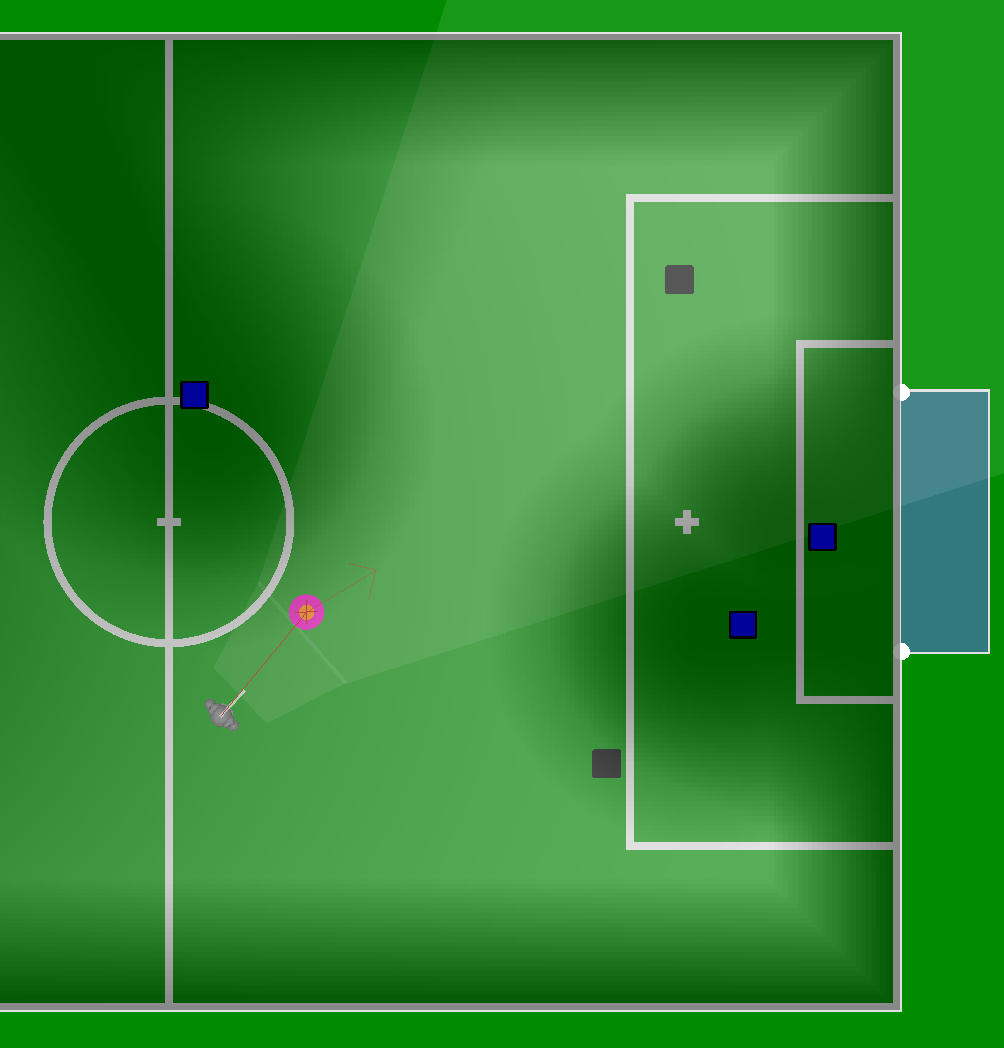

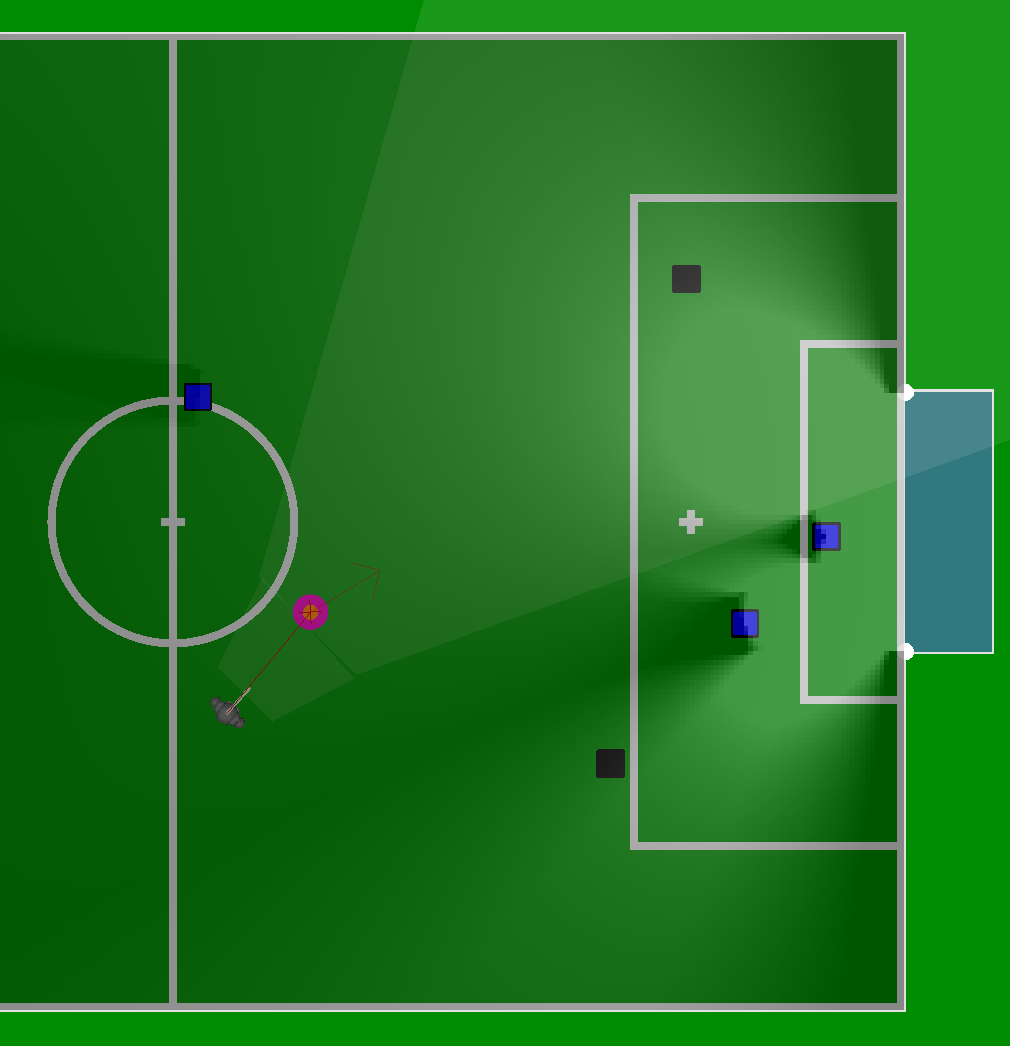

If you generate ratings from 0 to 1 for positions of a grid on the field, you can interpolate them in colour from dark to light and visualise them as a heat map.
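The idea of rating grid positions and colouring them can be sketched in a few lines. This is a minimal illustration, not the B-Human implementation: the field dimensions, the cell size and the toy `goal_rating` function are assumptions made up for this example.

```python
import math

# Hypothetical field dimensions in metres (assumption, not from the article).
FIELD_LENGTH = 9.0
FIELD_WIDTH = 6.0


def goal_rating(x, y):
    """Toy rating in [0, 1]: the closer to the opponent goal, the better.

    Placeholder only; the real evaluation functions are not shown here.
    """
    goal_x, goal_y = FIELD_LENGTH / 2, 0.0
    distance = math.hypot(goal_x - x, goal_y - y)
    # The farthest point on the field is a corner of the own half.
    max_distance = math.hypot(FIELD_LENGTH, FIELD_WIDTH / 2)
    return max(0.0, 1.0 - distance / max_distance)


def to_grey(rating):
    """Linearly interpolate a rating in [0, 1] from dark (0) to light (255)."""
    clamped = min(1.0, max(0.0, rating))
    return round(clamped * 255)


def heat_map(rating_fn, cell_size=0.5):
    """Evaluate rating_fn at the centre of every grid cell, row by row."""
    columns = int(FIELD_LENGTH / cell_size)
    rows = int(FIELD_WIDTH / cell_size)
    grid = []
    for row in range(rows):
        y = FIELD_WIDTH / 2 - (row + 0.5) * cell_size
        grid.append([
            to_grey(rating_fn(-FIELD_LENGTH / 2 + (col + 0.5) * cell_size, y))
            for col in range(columns)
        ])
    return grid


grid = heat_map(goal_rating)
```

Each entry of `grid` is a grey value that a drawing routine could paint onto the corresponding field cell; a colour gradient works the same way with one interpolation per colour channel.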

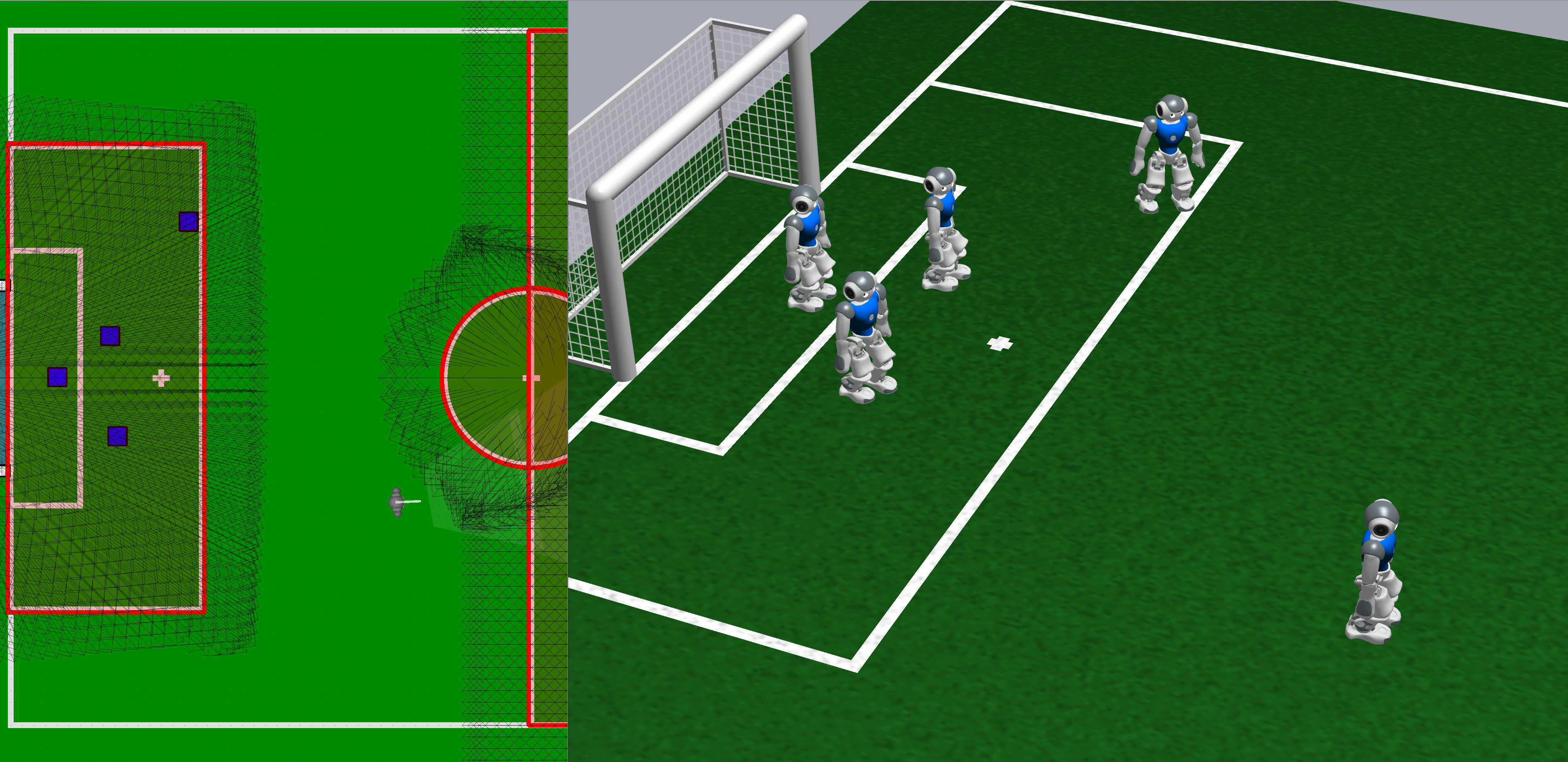

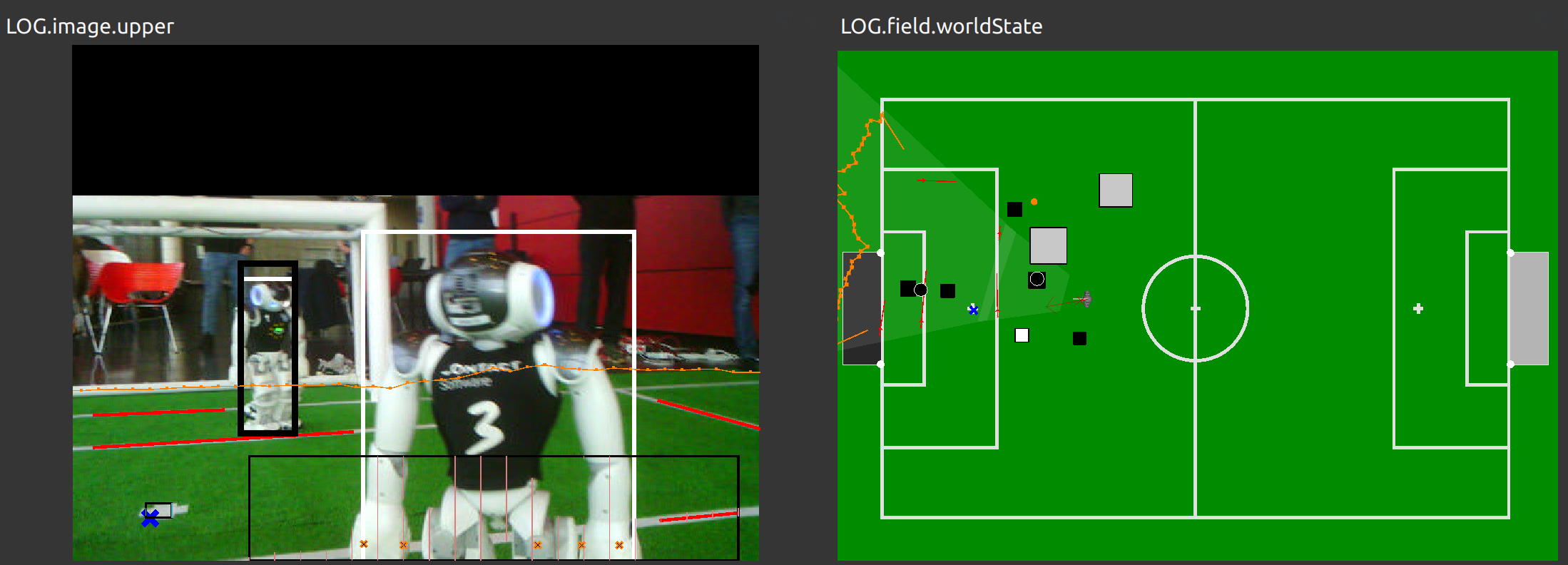

Figure: A typical game situation from the simulator

Figure: World model of the ball-playing robot overlaid with a heat map of the evaluation function for passing opportunities

Figure: World model of the ball-playing robot overlaid with a heat map of the evaluation function for goal scoring opportunities

Figure: Combination of the two evaluation functions, as used by the strategy in the behaviour

In the future, the players should be able to create space when they are not currently in possession of the ball. To do this, they can position themselves in such a way that their own rating is maximized, making them attractive for a pass followed by a shot on goal.
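Positioning by maximising one's own rating could look roughly like the sketch below. Combining the two ratings by multiplication, the candidate positions and the rating values are all assumptions for illustration; the article does not state how the ratings are combined.

```python
def best_position(candidates, pass_rating, goal_rating):
    """Return the candidate position with the highest combined rating.

    Assumption: the combined rating is the product of the probability that
    a pass arrives there and the probability that a shot from there scores.
    """
    return max(candidates, key=lambda p: pass_rating(p) * goal_rating(p))


# Hypothetical candidate positions (x, y) in metres with made-up ratings.
pass_ratings = {(1.0, 0.0): 0.9, (3.0, 1.0): 0.8, (4.0, 0.0): 0.2}
goal_ratings = {(1.0, 0.0): 0.1, (3.0, 1.0): 0.7, (4.0, 0.0): 0.9}

target = best_position(list(pass_ratings), pass_ratings.get, goal_ratings.get)
```

A product rewards positions that are good on both counts: a spot right in front of the goal is of little use if a pass is unlikely to reach it.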

PenaltyMarkClassifier

by Finn M. Ewers and Simon Werner

The aim of this project is to recognise penalty marks with a neural network in order to improve the localisation of robots on the pitch. Penalty marks are already detected quite reliably using conventional image processing methods. However, the candidate regions for this have to be chosen quite conservatively to keep the detection reliable, which means that penalty marks are detected only rarely.

We have created a dataset of over 35,000 images and trained a convolutional neural network on it, which delivers very good results even on candidate regions at greater distances. In contrast to the previous recogniser, our module can reliably recognise penalty marks up to a distance of 3.5 m. In addition, we plan to adapt the module that provides the candidate regions so that more candidates are also found at close range.

Figure: Localisation in space

Video: Improved range of the penalty mark detector

Classification of line intersections

by Laurens Schiefelbein

One means for the robots to know their position on the field is the detection of line intersections. At the moment, this is done via the IntersectionsProvider, which detects intersections of field lines. However, the current classification of these recognised intersections is still relatively unreliable.

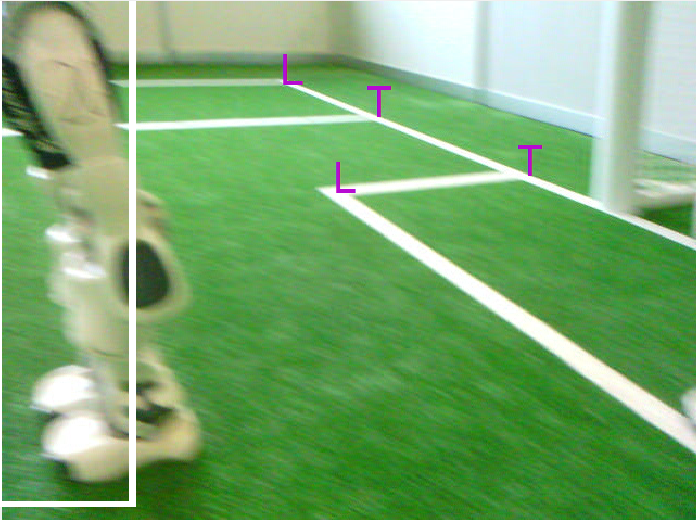

Figure: Classification of line intersections

The aim of this project is to replace the current classifier, which uses conventional image processing methods, with a machine learning classifier. To this end, a convolutional neural network is trained that receives candidate regions of line intersections from the IntersectionsProvider and assigns them to one of three classes: T-intersections, L-intersections and X-intersections.

Figure: One training picture each for the three classes

The training data can be extracted from the logs of previous tournaments and test matches, which greatly simplifies the collection of the several thousand images.

Recognition of teammates and opponents based on image processing methods

by Florian Scholz and Lennart Heinbokel

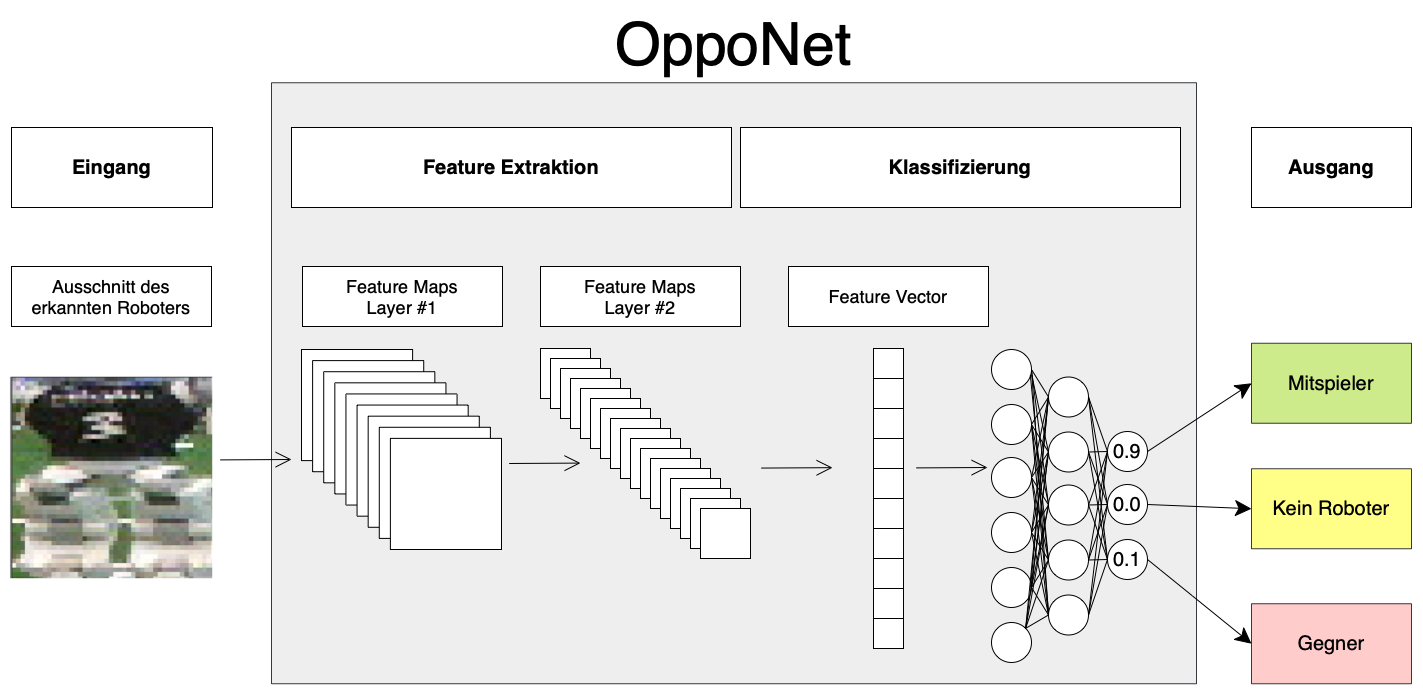

Just like in regular football, it is an advantage in robot football if a player can see who its teammates are and who its opponents are. In B-Human, however, teammates have so far not been identified using image processing methods. Robots are localised in the camera images using deep learning methods, but at this stage it is not determined whether a detected robot belongs to B-Human or to the opposing team. Instead, this was determined by each robot informing all team members of its current position via network communication.

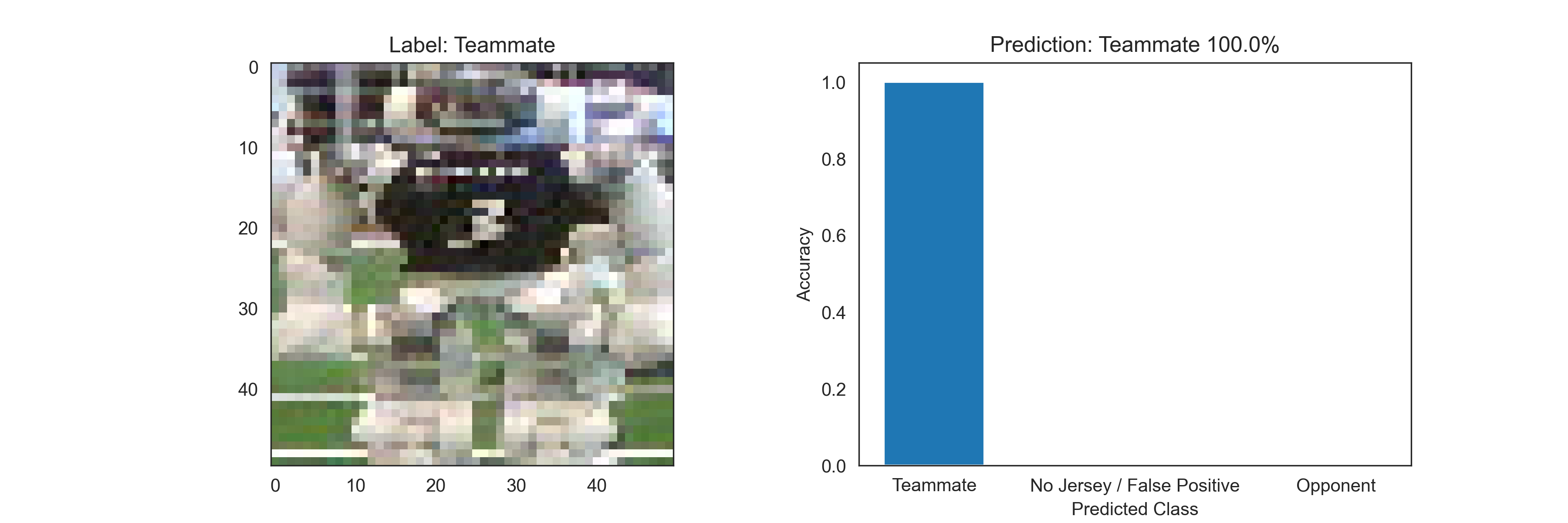

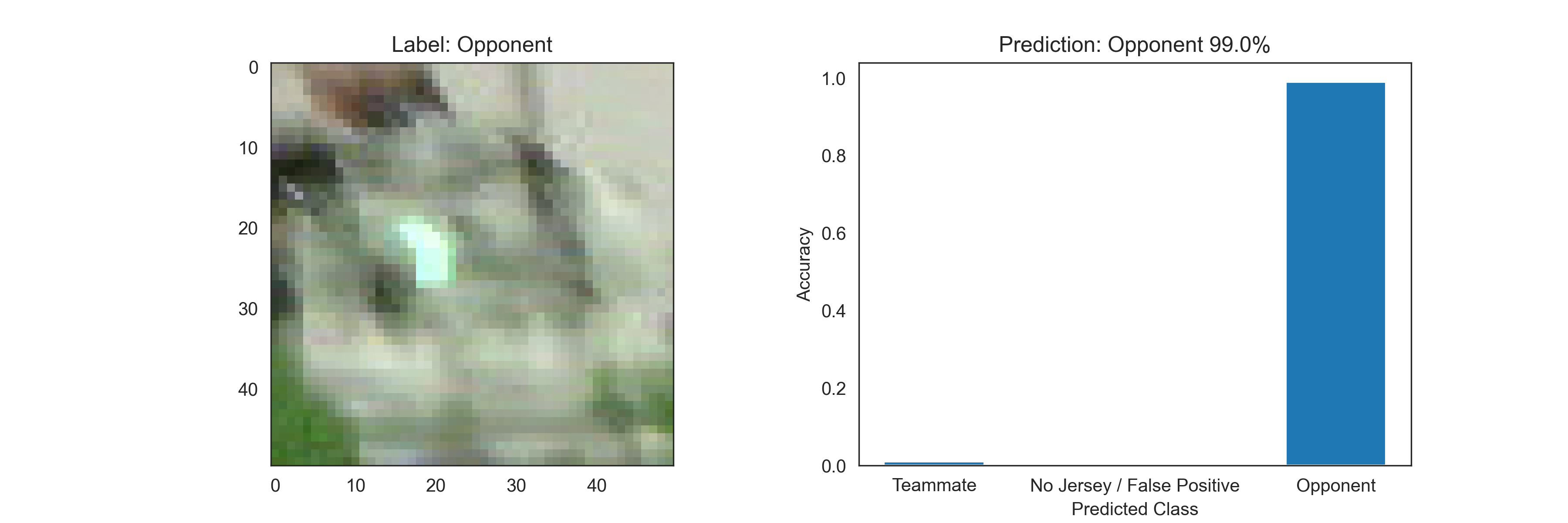

This approach has produced convincing results in the past. However, this year's new RoboCup rules severely restrict intra-team communication, which means that the approach cannot be used in the future. Because of this rule change, a computer-vision-based approach was implemented during this project. Based on the image section of a detected robot, it decides whether the robot is a teammate, an opponent, or a false positive of the robot detector.

Figure 1: OppoNet architecture

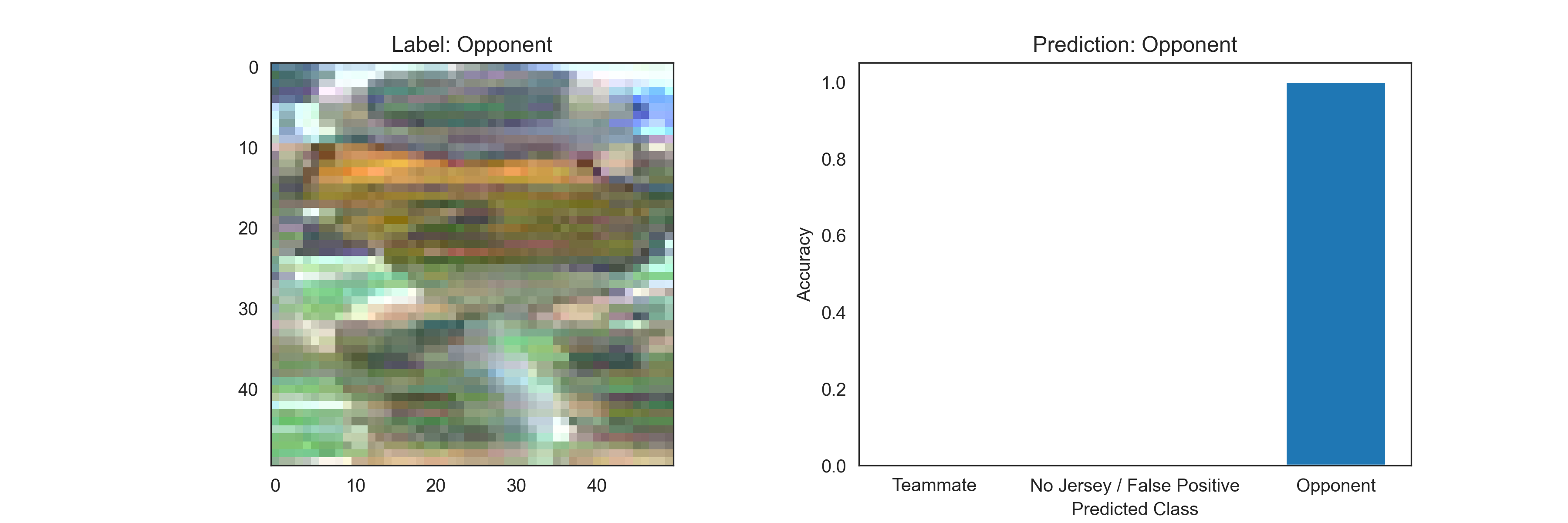

However, classifying the jersey colours is less trivial than it sounds at first. The image sections are small, were taken in a wide variety of lighting conditions and sometimes show strong motion blur, so the colours of the robots are not easy to recognise even for us humans. Nevertheless, the model implemented in TensorFlow currently assigns ~95% of all examples in the test data to the correct class. The following figures show some examples of input data and the output of the model.

Figure 2: Example of the prediction of the model

Figure 3: Example of the prediction of the model

Figure 4: Example of the prediction of the model

In addition to the development of the actual model, the project also covered the acquisition, labelling and preparation of the required data. To generate examples for the machine learning problem, 15,000 images of robots from different RoboCup teams, taken from recordings of RoboCup competitions in previous years, have been used to date. The data was versioned and stored using the Data Version Control (DVC) tool.

Visual Referee Challenge

by Michelle Gusev, Ayleen Lührsen and Sina Schreiber

In addition to the regular games, the RoboCup also includes so-called Technical Challenges from time to time. These are separate from the normal 5 vs 5 games and may require the implementation of completely different functions and behaviours. One of this year's challenges is the Visual Referee Challenge, in which the robot faces a human referee and has to assign the referee's gestures to a game situation and to the corresponding team. It signals the result by imitating the pose and announcing it acoustically. Examples of this would be "Goal Left Team" or "Penalty Kick Right Team".

For this task, we deliberately decided against training our own neural network to recognise the poses, as collecting and labelling a sufficiently wide range of training data would have involved a lot of effort. Instead, we use MoveNet, a network that recognises people and the positions of their essential body parts in pictures and videos. We then use these joint positions to check, based on the distances and angles along the shoulder-elbow-wrist chain, whether the referee's current posture corresponds to one of the predefined poses. For this purpose, several rules regarding the distances and angles between joints have been stored for each pose. A "memory function" that stabilises the recognition is still to be added, as the recognised keypoints currently often jump around or tremble. This has to be compensated for before the RoboCup.
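A rule-based check of this kind can be sketched as follows. The code assumes 2D keypoints in image coordinates (y grows downwards), which is how pose networks such as MoveNet typically report them; the function names, the 150° threshold and the majority vote used as "memory function" are illustrative assumptions, not the rules actually stored for the challenge.

```python
import math
from collections import Counter, deque


def joint_angle(a, b, c):
    """Angle in degrees at point b between the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0.0:
        raise ValueError("keypoints coincide, angle is undefined")
    cosine = (v1[0] * v2[0] + v1[1] * v2[1]) / norm
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cosine))))


def arm_raised_straight(shoulder, elbow, wrist, min_elbow_angle=150.0):
    """Hypothetical rule: arm roughly straight and wrist above the shoulder."""
    straight = joint_angle(shoulder, elbow, wrist) >= min_elbow_angle
    return straight and wrist[1] < shoulder[1]


class PoseMemory:
    """One possible 'memory function': majority vote over recent frames."""

    def __init__(self, size=10):
        self.history = deque(maxlen=size)

    def update(self, pose):
        """Store the pose of the current frame and return the smoothed pose."""
        self.history.append(pose)
        return Counter(self.history).most_common(1)[0][0]
```

With a majority vote, a single frame in which a keypoint jumps does not change the reported pose; the price is a delay of a few frames before a genuinely new pose is accepted.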

Leaving illegal areas

by Jo Lienhoop and Sina Schreiber

During a RoboCup match, there are areas on the field that the robots are not allowed to enter. Entering these areas results in a time penalty, which we want to avoid. Among other things, according to the rules, robots are not allowed to enter

- the opponents' half, during a kick-off

- the centre circle, during an opponent's kick-off

- their own penalty area, with more than three players of their own team

- the ball area, during a kick-in or free kick by the opposing team.

An illegal area is visualised as a red rectangle in the robot's world view. If the robot detects that its current position is in an illegal area, it leaves the area sideways by following a potential field. If a robot is about to enter an illegal area, it simply stops and does not enter. Note that the 5 cm wide field lines are part of the respective area, and that we have additionally extended each area by a buffer of 20 cm. If the robot was on its way to the ball, it turns after leaving the area so that it can observe the ball while standing, and walks directly to it as soon as the ball has been released.
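The containment test with a buffer can be sketched as below. The `Rect` type, the function names and the example coordinates are hypothetical; only the 20 cm buffer comes from the text, and the potential field used for leaving an area is not modelled here.

```python
from dataclasses import dataclass

BUFFER = 0.20  # metres; the 20 cm buffer mentioned in the text


@dataclass(frozen=True)
class Rect:
    """Axis-aligned rectangle in field coordinates (metres)."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float

    def expanded(self, margin):
        """Return a copy grown by margin on every side."""
        return Rect(self.x_min - margin, self.x_max + margin,
                    self.y_min - margin, self.y_max + margin)

    def contains(self, x, y):
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


def is_illegal(position, area, buffer=BUFFER):
    """True if position lies inside the area including its buffer."""
    return area.expanded(buffer).contains(*position)


def may_walk_to(current, target, area, buffer=BUFFER):
    """Stop rule: a robot outside the area must not step into it."""
    return is_illegal(current, area, buffer) or not is_illegal(target, area, buffer)


# Hypothetical area: bounding box of a centre circle with radius 0.75 m,
# with the field lines assumed to be already included.
centre_area = Rect(-0.75, 0.75, -0.75, 0.75)
```

`may_walk_to` returns `True` for a robot that is already inside, because in that case the leaving behaviour takes over instead of the stop rule.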